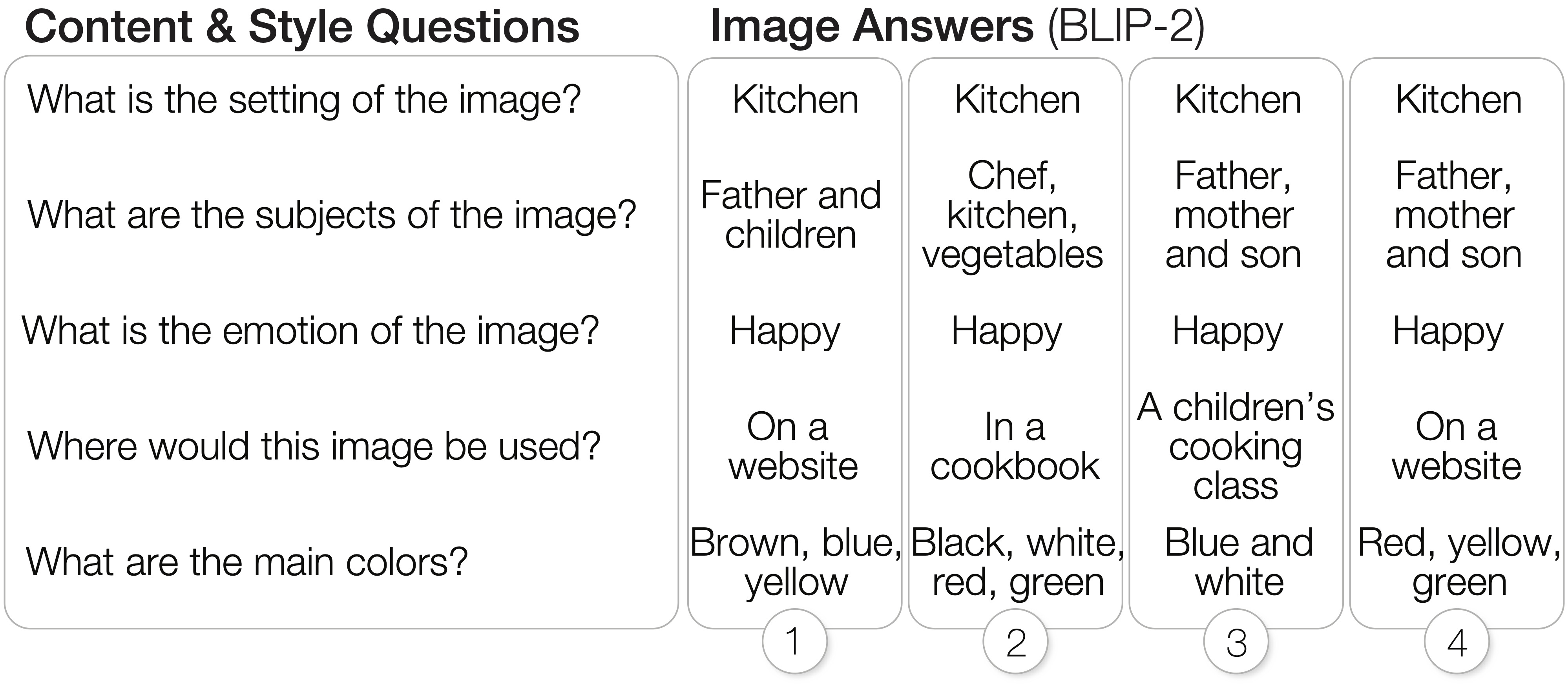

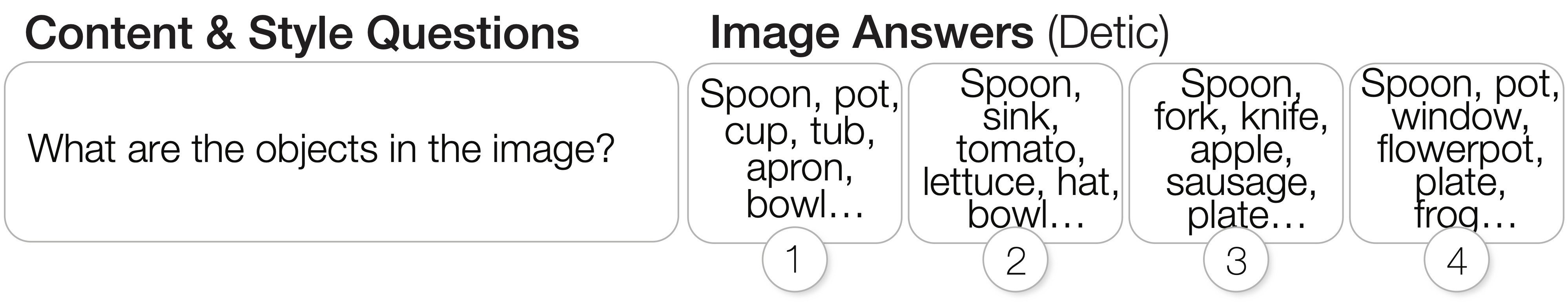

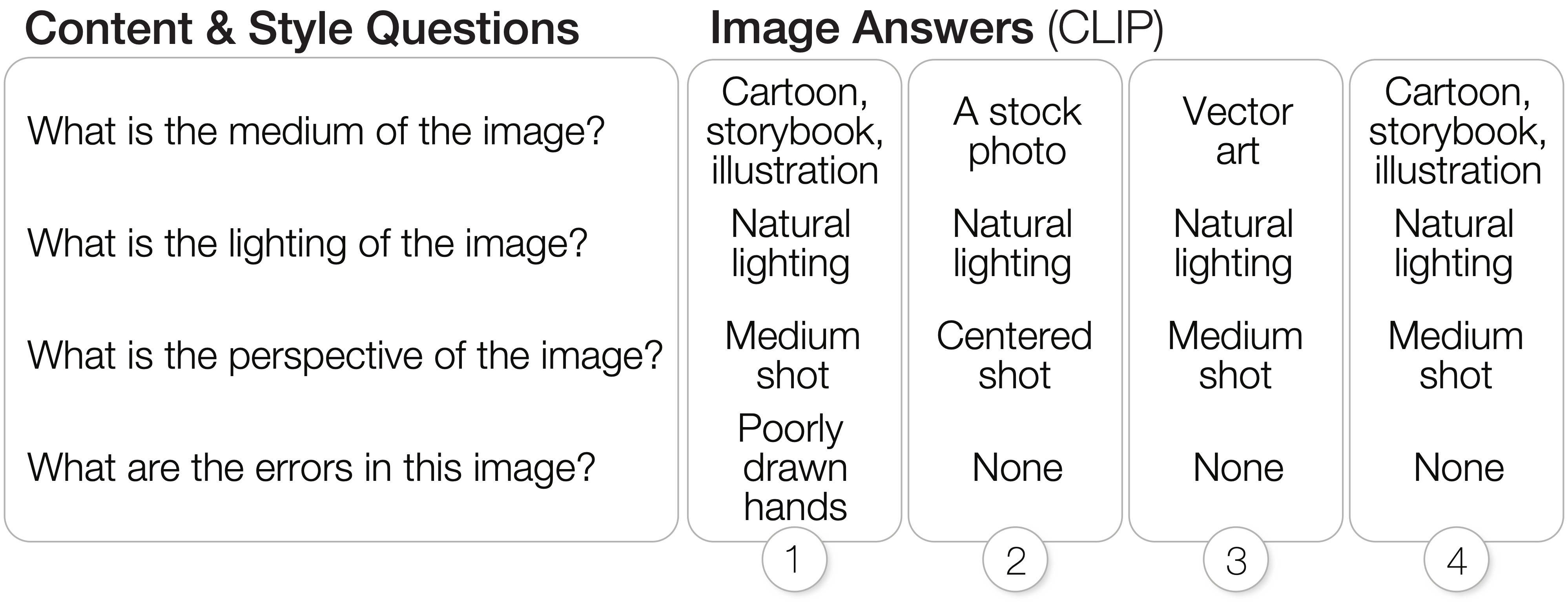

Blind and low vision (BLV) creators use images to communicate with sighted audiences. However, creating or retrieving images is challenging for BLV creators as it is difficult to use authoring tools or assess image search results. Thus, creators limit the types of images they create or recruit sighted collaborators. While text-to-image generation models let creators generate high-fidelity images based on a text description (i.e. prompt), it is difficult to assess the content and quality of generated images. We present GenAssist, a system to make text-to-image generation accessible. Using our interface, creators can verify whether generated image candidates followed the prompt, access additional details in the image not specified in the prompt, and skim a summary of similarities and differences between image candidates. To power the interface, GenAssist uses a large language model to generate visual questions, vision-language models to extract answers, and a large language model to summarize the results. Our study with 12 BLV creators demonstrated that GenAssist enables and simplifies the process of image selection and generation, making visual authoring more accessible to all.

@inproceedings{huh2023genassist,

title={GenAssist: Making Image Generation Accessible},

author={Huh, Mina and Peng, Yi-Hao and Pavel, Amy},

booktitle={Proceedings of the 36th Annual ACM Symposium on User Interface Software and Technology},

pages={1--17},

year={2023}

}