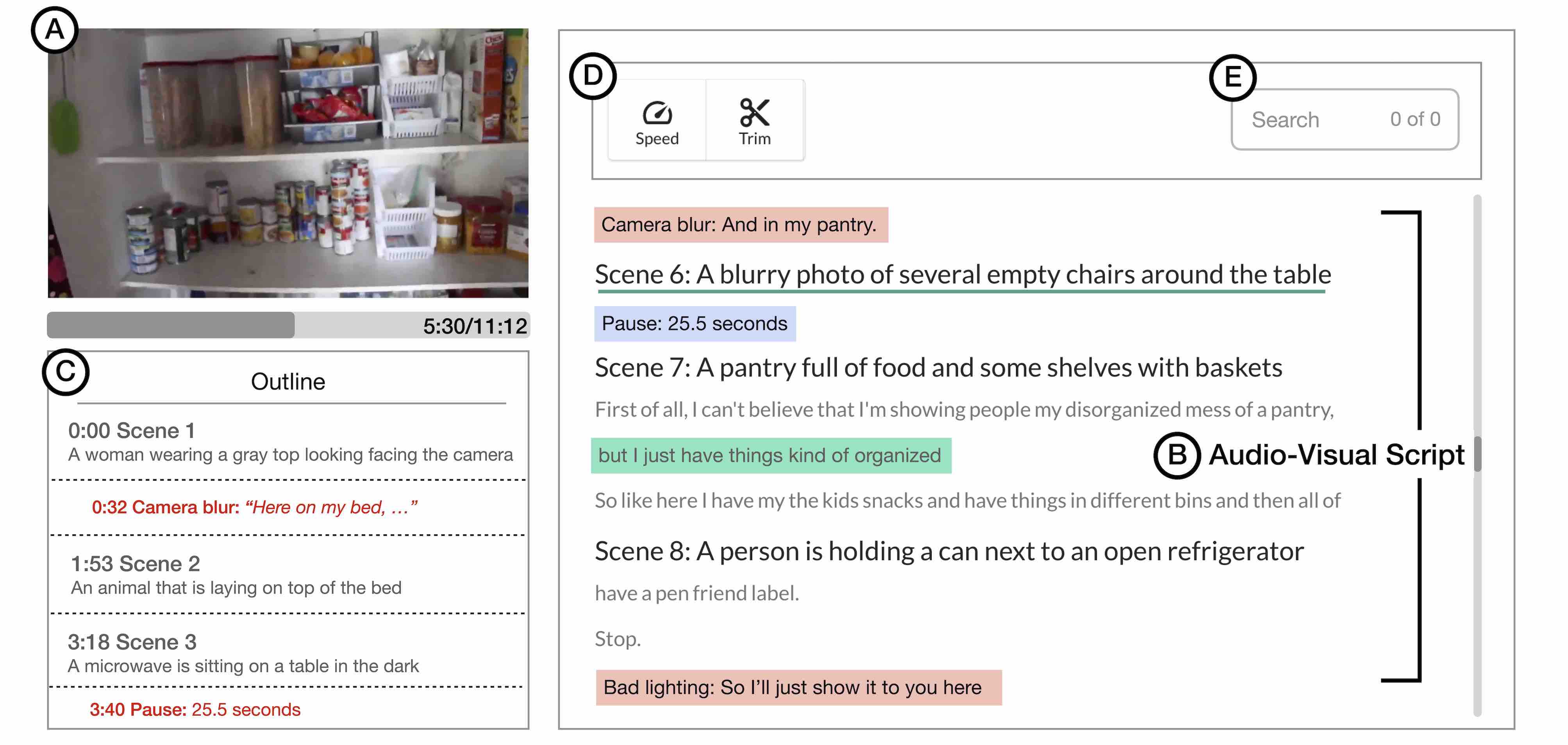

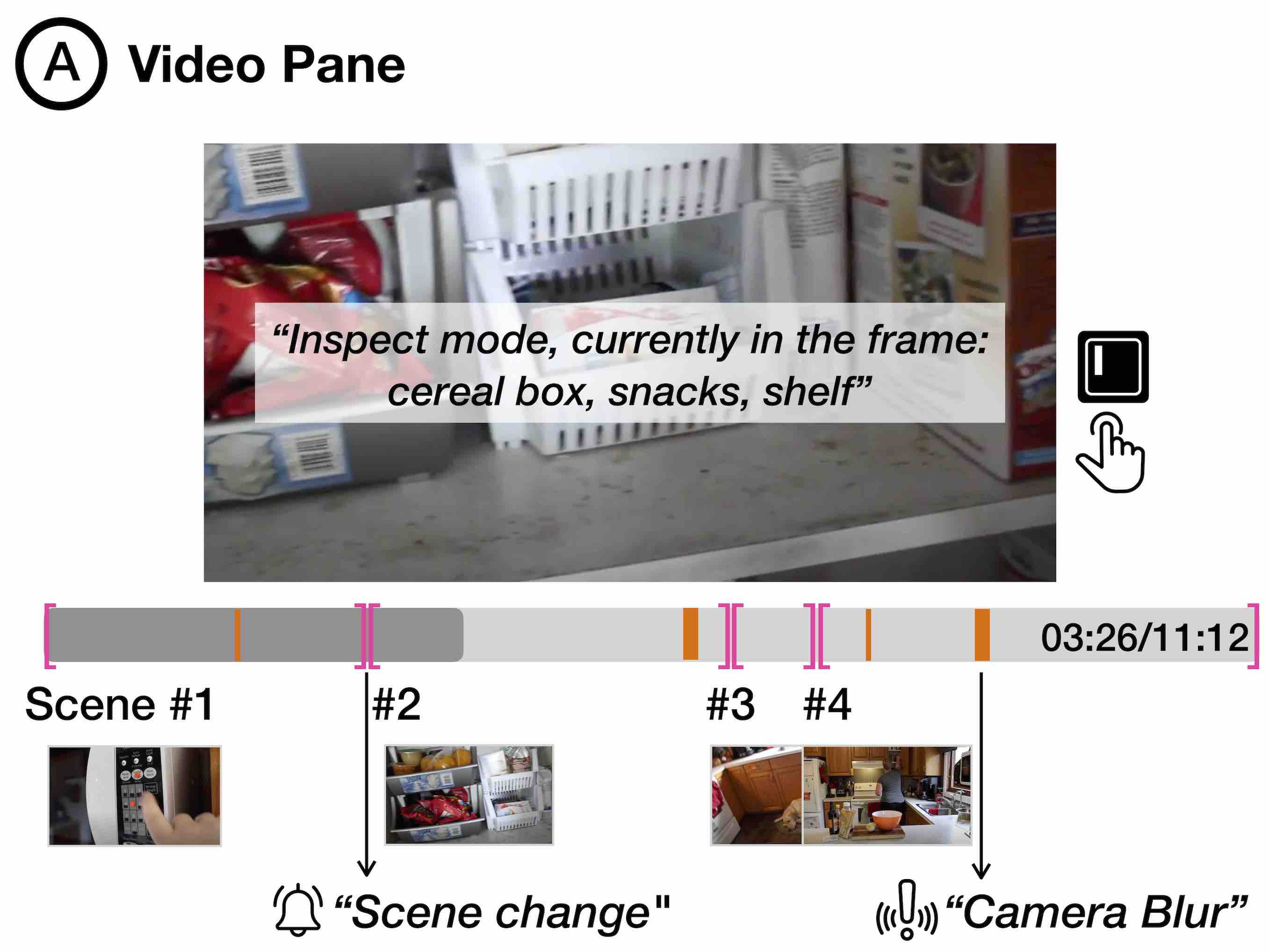

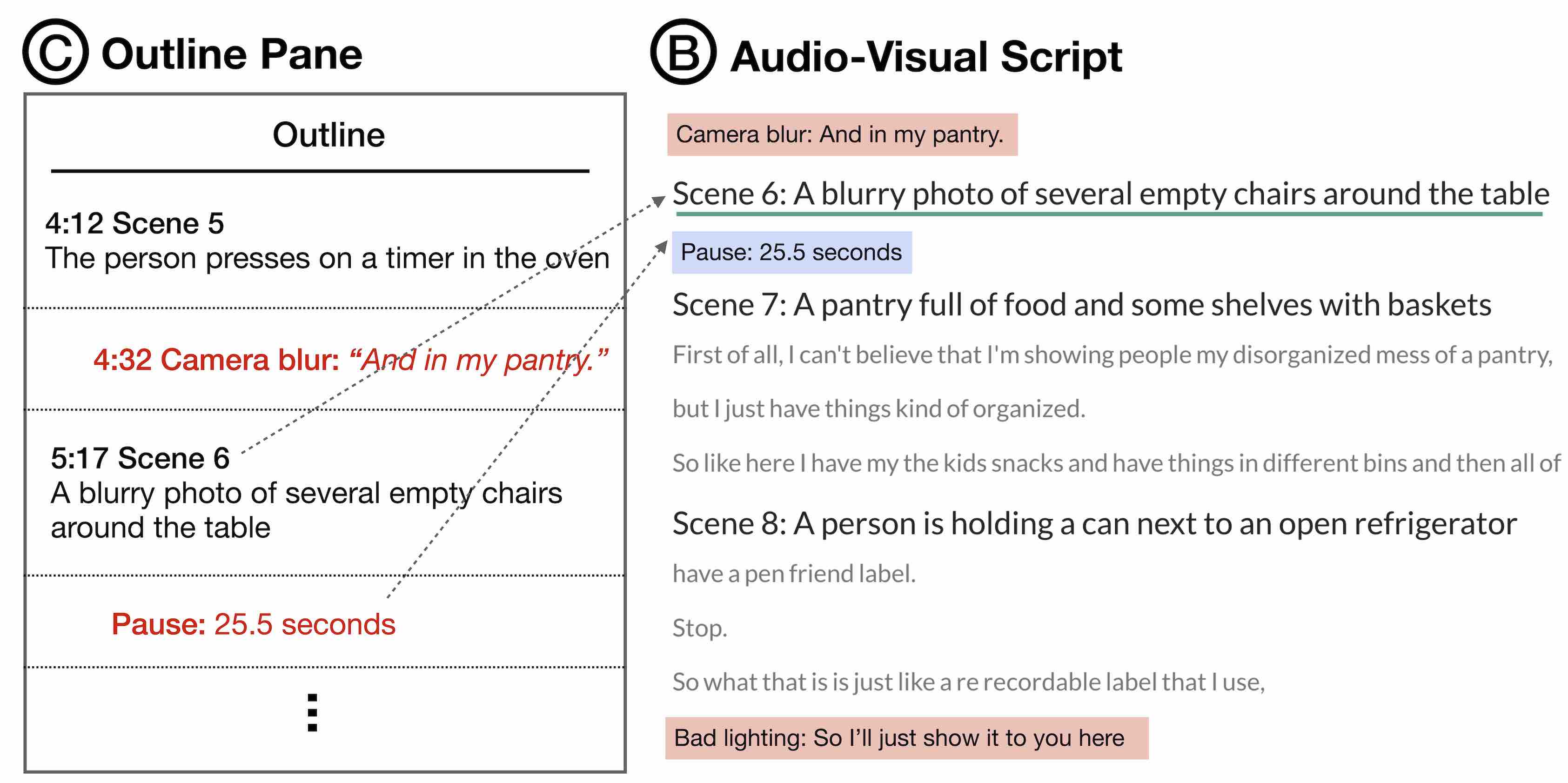

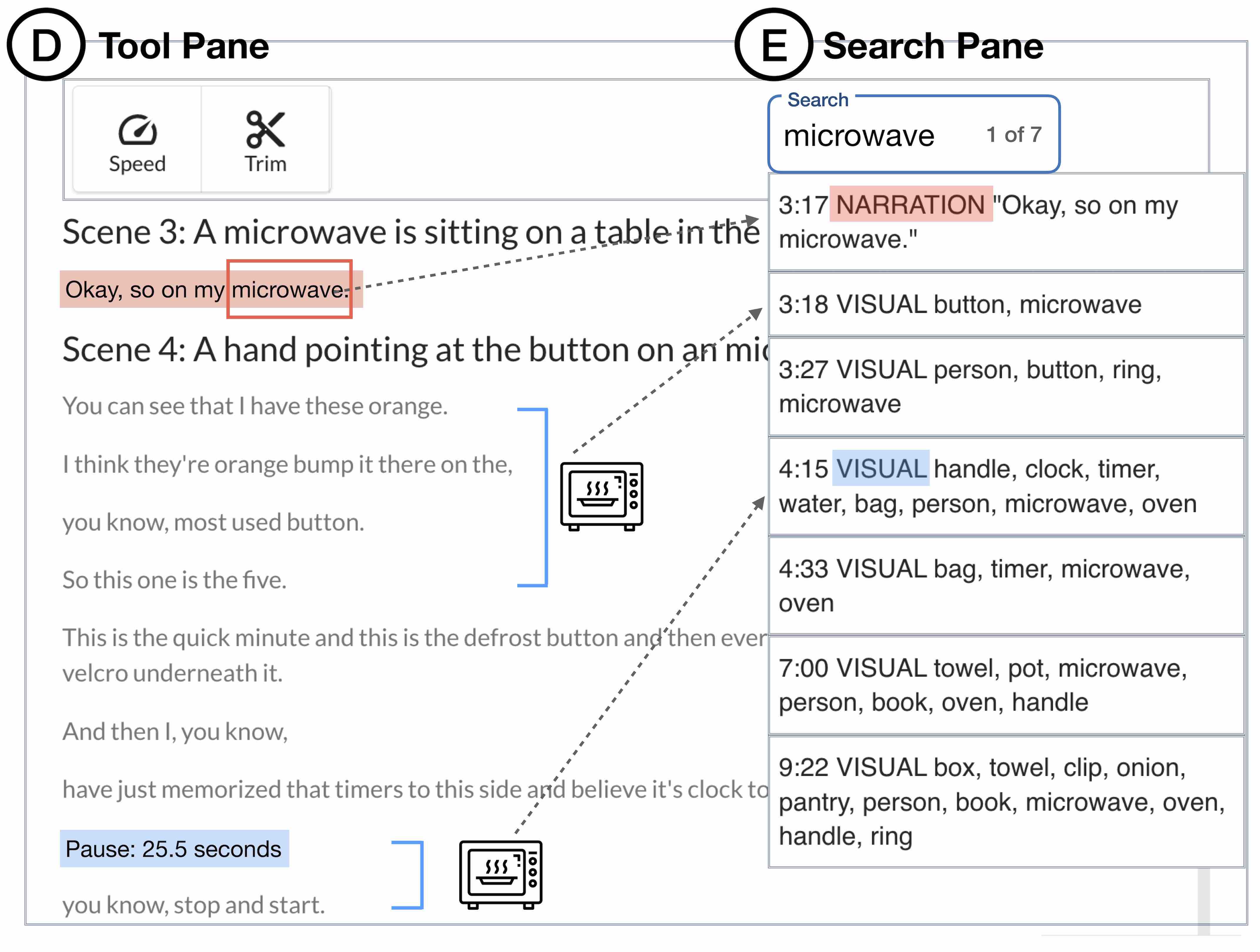

Sighted and blind and low vision (BLV) creators alike use videos to communicate with broad audiences. Yet, video editing remains inaccessible to BLV creators. Our formative study revealed that current video editing tools make it difficult to access the visual content, assess the visual quality, and efficiently navigate the timeline. We present AVscript, an accessible text-based video editor. AVscript enables users to edit their video using a script that embeds the video's visual content, visual errors (e.g., dark or blurred footage), and speech. Users can also efficiently navigate between scenes and visual errors or locate objects in the frame or spoken words of interest. A comparison study (N=12) showed that AVscript significantly lowered BLV creators' mental demands while increasing confidence and independence in video editing. We further demonstrate the potential of AVscript through an exploratory study (N=3) where BLV creators edited their own footage.
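To make the idea of a script that embeds speech, visual content, and visual errors more concrete, here is a minimal sketch of how such a script could be represented and navigated. This is not AVscript's actual implementation: the `Segment` type, the `kind` labels, the helper functions `next_of_kind` and `find_text`, and the sample data are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Segment:
    """One entry in a hypothetical audio-visual script (illustrative only)."""
    start: float  # start time in seconds
    end: float    # end time in seconds
    kind: str     # assumed labels: "speech", "scene", or "error"
    text: str     # transcript, scene description, or error label


def next_of_kind(script: List[Segment], time: float, kind: str) -> Optional[Segment]:
    """Return the earliest segment of `kind` that starts strictly after `time`."""
    for seg in sorted(script, key=lambda s: s.start):
        if seg.kind == kind and seg.start > time:
            return seg
    return None


def find_text(script: List[Segment], query: str) -> List[Segment]:
    """Return every segment whose text contains `query` (case-insensitive)."""
    q = query.lower()
    return [seg for seg in script if q in seg.text.lower()]


# Made-up sample data, not taken from the paper.
script = [
    Segment(0.0, 4.0, "scene", "A person stands in a kitchen"),
    Segment(0.5, 3.5, "speech", "Hi everyone, welcome back"),
    Segment(4.0, 6.0, "error", "blurred footage"),
    Segment(6.0, 12.0, "scene", "Close-up of a cutting board"),
    Segment(6.5, 11.0, "speech", "Today we are making soup"),
]

# Jump from the start of the video to the next visual error.
error = next_of_kind(script, 0.0, "error")
print(error.start, error.text)

# Locate a spoken word of interest.
for seg in find_text(script, "soup"):
    print(seg.start, seg.kind, seg.text)
```

Keeping every kind of content in one time-ordered list means that jumping to the next scene, jumping to the next visual error, and searching for a spoken word all reduce to simple scans over text, which is the property a text-based editor relies on.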

@inproceedings{10.1145/3544548.3581494,
  author = {Huh, Mina and Yang, Saelyne and Peng, Yi-Hao and Chen, Xiang 'Anthony' and Kim, Young-Ho and Pavel, Amy},
  title = {AVscript: Accessible Video Editing with Audio-Visual Scripts},
  year = {2023},
  isbn = {9781450394215},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3544548.3581494},
  doi = {10.1145/3544548.3581494},
  abstract = {Sighted and blind and low vision (BLV) creators alike use videos to communicate with broad audiences. Yet, video editing remains inaccessible to BLV creators. Our formative study revealed that current video editing tools make it difficult to access the visual content, assess the visual quality, and efficiently navigate the timeline. We present AVscript, an accessible text-based video editor. AVscript enables users to edit their video using a script that embeds the video’s visual content, visual errors (e.g., dark or blurred footage), and speech. Users can also efficiently navigate between scenes and visual errors or locate objects in the frame or spoken words of interest. A comparison study (N=12) showed that AVscript significantly lowered BLV creators’ mental demands while increasing confidence and independence in video editing. We further demonstrate the potential of AVscript through an exploratory study (N=3) where BLV creators edited their own footage.},
  booktitle = {Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems},
  articleno = {796},
  numpages = {17},
  keywords = {video, accessibility, authoring tools},
  location = {Hamburg, Germany},
  series = {CHI '23}
}